Click here to try it out.

Overview

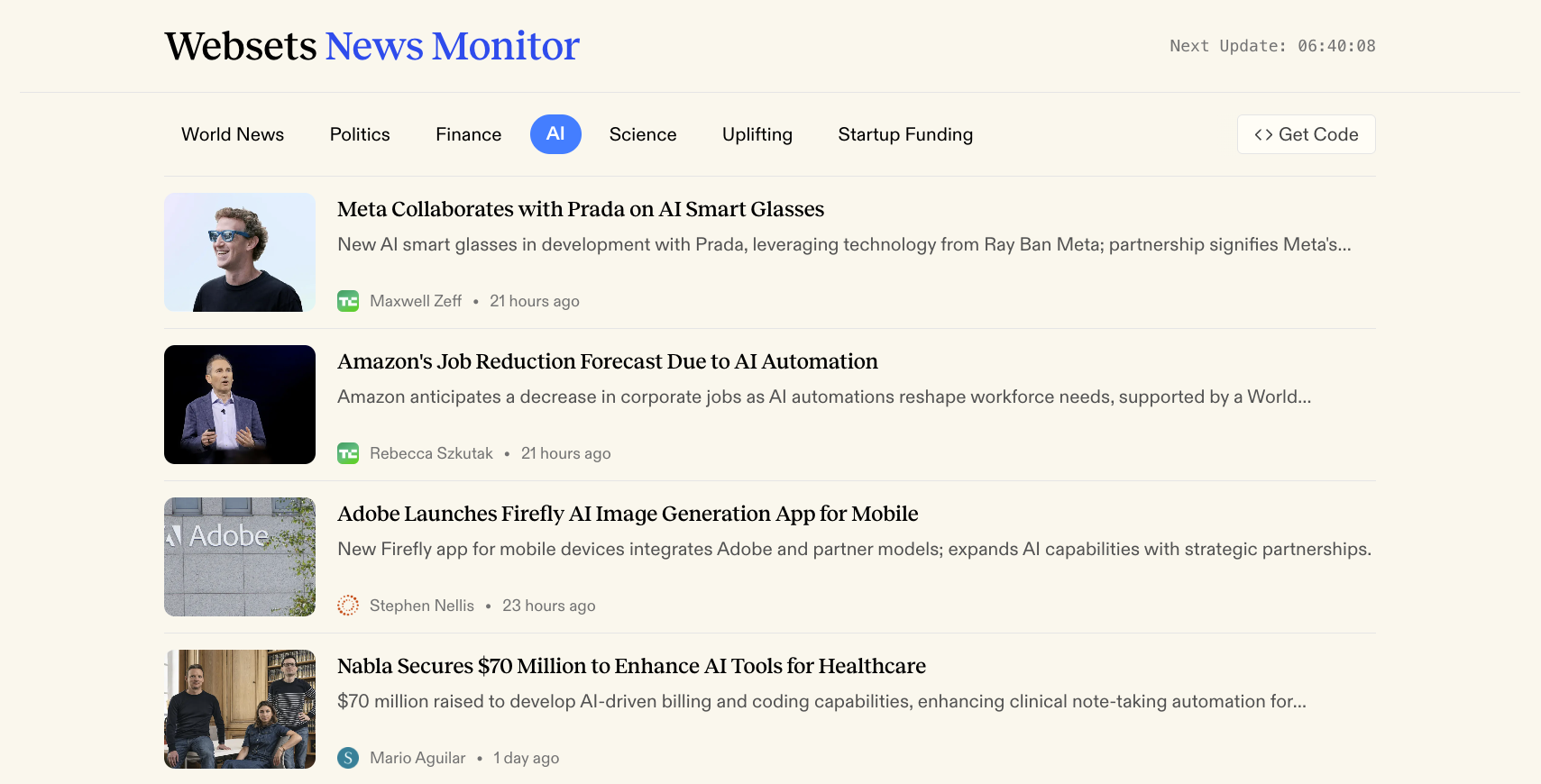

We created a Websets News Monitor that uses the Websets API to monitor the web semantically for queries like “startup funding round announcements” or “new product launches.” Each tab uses a different Webset that updates daily using a monitor. It demonstrates best practices for news monitoring including:- Deduplicating articles about the same story

- Filtering out low-quality data sources

- Receiving real-time updates via webhooks

How it Works

1

Set Up a Webhook

Webhooks allow you to subscribe to real-time updates as your Websets run. We want to know when a Webset is created and items finish enriching, so we’ll subscribe to

webset.created and webset.item.enriched.Javascript

Save

webhook.secret, we’ll use it later to validate incoming webhook requests.2

Create a Webset

Now we’ll create a Webset that searches for the types of articles we are looking for. Use

query to direct the search and criteria to narrow down the results.In this example we’re looking for articles about recent startup fundraises.Javascript

3

Monitor the Webset

We want our Webset to update with new articles daily, so we’ll create a monitor with the

webset.id. We set the cadence parameter to run daily and the search behavior so it looks for new results.By default, monitors use the last search the Webset ran. When we created the Webset we used “in the last 24 hours” so it’s always relative to when the monitor runs.Javascript

4

Handle the Webhook

Lastly, we need to create an endpoint to handle the webhook requests. We’ll setup a Next.js route to handle POST requests and parse the event data.For security purposes, you should verify the request’s signature using the webhook secret from the first step. See the signature verification guide for more info.

Javascript

View the full route implementation here.

Semantic Whitelisting

We want our feeds to contain high-quality links and avoid SEO spam. This would normally require manually maintaining lists of domains to include/exclude from your results, but with Websets it’s simple. You can create criteria that function as a semantic whitelist, telling the LLM what kinds of articles to allow. Here’s an example:Storyline Deduplication

A common issue when monitoring news is handling multiple articles about the same storyline. Often you want to group articles by storyline or remove duplicates so users don’t see repeated content. In our demo, we solve this using embeddings, vector search, and an LLM to classify duplicates.1

Embed the Article Title

First, we’ll embed the article’s title using OpenAI’s embedding API. We’ll use the

text-embedding-3-small model that produces vectors optimized for similarity comparisons.Javascript

2

Search for Similar Articles

Next, we use PostgreSQL’s

pgvector extension to find the 10 most similar articles from the last week.Javascript

3

Classify Duplicates with an LLM

Finally, we’ll use an LLM with structured outputs to classify whether the article is a duplicate. The LLM will look at the titles of similar articles and determine if they are about the same event.

Javascript